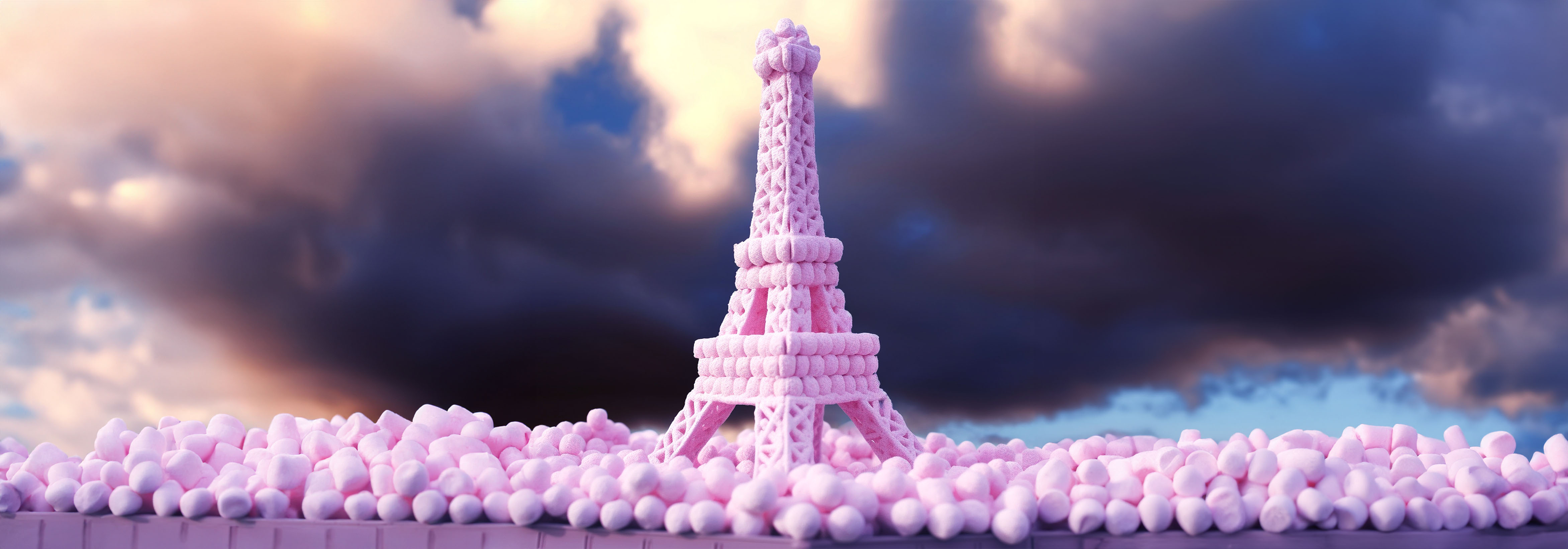

What To Do With an Eiffel Tower Made of Marshmallows?

Do you remember the last time you saw an AI-generated image or answer to a query and thought, “Wow…that is truly unhinged”? Almost everyone has encountered an AI hallucination by now. A hallucination occurs when a large language model (LLM) or other generative AI system perceives patterns or objects that are nonexistent or imperceptible to humans, producing nonsensical or inaccurate outputs. Hallucinations can seem funny when the stakes are low, but they can have profound implications for an organization’s reputation and for the reliability of its AI systems.

For that reason, corrective protocols that mitigate AI hallucinations should be a top priority for any organization leveraging these systems.

Error

The Eiffel Tower is 600 meters tall. (It’s about 330 meters, including its antennas.)

vs.

Hallucination

The Eiffel Tower is made of marshmallows.

Before we can implement best practices for mitigating AI hallucinations, however, we have to fully understand the problem.


Fighting AI Hallucinations With ECS

At ECS, we have developed our own hallucination/error protocols for LLMs and LLM-RAGs (LLMs paired with retrieval-augmented generation), which can be broken down into six key steps:

STEP 1

Chain-of-thought/smart prompting: An advanced prompting strategy that guides the model through intermediate reasoning steps, often via a series of prompts that build on each other rather than a single, isolated prompt. Chain-of-thought prompting has been shown to improve LLM performance on a range of arithmetic, commonsense, and symbolic reasoning tasks. Our goal is to guide the model through a sequence of thoughts, each building on the previous one, to arrive at a complex, high-quality output.
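To make the “series of prompts” idea concrete, here is a minimal Python sketch of prompt chaining. It assumes a hypothetical `ask` callable that sends a prompt to whatever model you use and returns its text reply; the function name `chain_prompts` and the instruction wording are illustrative choices, not part of the ECS protocol.

```python
from typing import Callable, List

# Hypothetical stand-in for any LLM call: prompt text in, reply text out.
AskFn = Callable[[str], str]


def chain_prompts(ask: AskFn, question: str, steps: List[str]) -> str:
    """Run a series of prompts where each one builds on the replies so far."""
    context = f"Question: {question}"
    for instruction in steps:
        # Each prompt carries the accumulated reasoning plus the next instruction.
        reply = ask(f"{context}\n\n{instruction}\nThink step by step.")
        context += f"\n\n{instruction}\n{reply}"
    # The last prompt asks for an answer grounded in all earlier steps.
    return ask(f"{context}\n\nUsing the reasoning above, give only the final answer.")


if __name__ == "__main__":
    seen: List[str] = []

    def fake_llm(prompt: str) -> str:
        # Fake model for offline testing: reports how many prompts it has seen.
        seen.append(prompt)
        return f"reply {len(seen)}"

    steps = ["List the relevant facts.", "Check each fact for consistency."]
    print(chain_prompts(fake_llm, "What is the Eiffel Tower made of?", steps))
```

Because `ask` is just a callable, the chain can be exercised with a fake model, as in the `__main__` block, before it is wired to a real API.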

STEP 2

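Repeatedly ask the same question X times (where X depends on computational cost and time constraints) to get multiple outputs (e.g., 100 answers). Sampling many outputs makes it easier to separate answers the model gives consistently from one-off fabrications.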
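Step 2 amounts to drawing many samples for a single prompt. The sketch below assumes the same kind of hypothetical `ask` callable and a model configured to sample with nonzero temperature (otherwise every reply would be identical); `sample_answers` and the tally with `collections.Counter` are illustrative, not ECS’s implementation.

```python
import itertools
from collections import Counter
from typing import Callable, List


def sample_answers(ask: Callable[[str], str], question: str, x: int) -> List[str]:
    """Ask the same question x times and keep every output."""
    if x < 1:
        raise ValueError("x must be at least 1")
    # Assumes the model samples with nonzero temperature, so replies can differ.
    return [ask(question) for _ in range(x)]


if __name__ == "__main__":
    # Fake model that cycles through canned replies in place of a real LLM.
    replies = itertools.cycle(["Paris", "Paris", "Lyon"])
    answers = sample_answers(lambda q: next(replies), "Where is the Eiffel Tower?", 6)
    print(Counter(answers).most_common())  # [('Paris', 4), ('Lyon', 2)]
```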

STEP 3

Using LLM-RAG, we ask our model to evaluate uploaded data and documents stored in a vector database. We then employ matching technology developed for ECS Pathfinder to determine each output’s accuracy against that material, requiring a 95% match or better. Answers that don’t make the cut are culled from the initial set of outputs.
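ECS Pathfinder’s matching technology is not public, so this sketch swaps in a common stand-in: cosine similarity between each answer’s embedding and the embeddings of retrieved source passages, keeping only answers whose best match reaches the 95% threshold. The helper names (`cosine`, `cull_unsupported`) and the toy two-dimensional vectors are assumptions for illustration; real embeddings would come from an encoder and have hundreds of dimensions.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cull_unsupported(
    answers: List[Tuple[str, Vector]],
    passages: List[Vector],
    threshold: float = 0.95,
) -> List[Tuple[str, Vector]]:
    """Keep answers whose closest retrieved passage scores at or above the threshold."""
    kept = []
    for text, vec in answers:
        # Stand-in for the proprietary matcher: best similarity to any passage.
        best = max((cosine(vec, p) for p in passages), default=0.0)
        if best >= threshold:
            kept.append((text, vec))
    return kept


if __name__ == "__main__":
    passages = [[1.0, 0.0], [0.0, 1.0]]  # toy 2-D "embeddings" of source passages
    answers = [("grounded", [0.99, 0.05]), ("ungrounded", [0.7, 0.7])]
    print([text for text, _ in cull_unsupported(answers, passages)])  # ['grounded']
```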

STEP 4

Surviving answers are encoded with a bidirectional encoder (à la BERT), stored as embeddings in a high-dimensional semantic vector database, and clustered.
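The article does not name a clustering algorithm, so the sketch below uses a simple greedy “leader” clustering over cosine similarity, assuming the answer embeddings have already been produced by a BERT-style encoder. `leader_cluster` and its 0.9 threshold are hypothetical choices.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def leader_cluster(vectors: List[Sequence[float]], threshold: float = 0.9) -> List[List[int]]:
    """Single-pass clustering: each vector joins the first cluster whose leader is close enough."""
    clusters: List[List[int]] = []
    for i, vec in enumerate(vectors):
        for members in clusters:
            # Compare against the cluster's first member (its "leader").
            if cosine(vectors[members[0]], vec) >= threshold:
                members.append(i)
                break
        else:
            clusters.append([i])  # no close match: start a new cluster
    # Largest first: a big cluster means many samples agreed in meaning.
    return sorted(clusters, key=len, reverse=True)


if __name__ == "__main__":
    vecs = [[1.0, 0.0], [0.98, 0.1], [0.0, 1.0], [0.99, 0.02]]
    print(leader_cluster(vecs))  # [[0, 1, 3], [2]]
```

A single pass keeps the example short, but its results depend on input order, which is one reason k-means or density-based methods are common alternatives in practice.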

STEP 5

We continuously evaluate emerging iterative frameworks, such as Reflexion (which builds on ReAct-style reasoning-and-acting agents), that enable an LLM to review its answers in a feedback loop, looking for possible errors or hallucinations. Any answers flagged this way are deleted from the clustered set that remains.
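The feedback loop can be sketched as repeated review passes that delete whatever a critic flags, stopping once a pass removes nothing. Here the critic is a hypothetical callable; in practice it would be an LLM prompted to check an answer for errors (as in Reflexion-style self-review), and the toy keyword check in the `__main__` block only stands in for it.

```python
from typing import Callable, List

# Hypothetical critic: returns True when it judges an answer to be an error or hallucination.
Critic = Callable[[str], bool]


def review_loop(answers: List[str], critic: Critic, max_rounds: int = 3) -> List[str]:
    """Run review passes, deleting flagged answers, until a pass removes nothing."""
    remaining = list(answers)
    for _ in range(max_rounds):
        survivors = [a for a in remaining if not critic(a)]
        if len(survivors) == len(remaining):
            break  # nothing new was flagged, so the loop has converged
        remaining = survivors
    return remaining


if __name__ == "__main__":
    def flags_marshmallows(answer: str) -> bool:
        # Toy critic standing in for an LLM asked "does this answer contain an error?"
        return "marshmallow" in answer.lower()

    drafts = ["Wrought-iron lattice", "Made of marshmallows", "Puddled iron"]
    print(review_loop(drafts, flags_marshmallows))  # ['Wrought-iron lattice', 'Puddled iron']
```

With a stochastic LLM as the critic, later passes can catch answers an earlier pass missed, which is why the loop runs more than once; `max_rounds` caps the cost.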

STEP 6

Some studies suggest that LLMs exhibit a degree of “theory of mind,” the complex cognitive capacity that allows us to infer another person’s beliefs and perspective. For the final step, we ask our LLM to draw on that capacity by adopting the persona of a topical expert and then, from that perspective, determining the best answer from the remaining set.
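Step 6 might be sketched as building an expert-persona prompt that lists the remaining candidates, then parsing the chosen number from the model’s reply. `build_persona_prompt`, `pick_best`, and the prompt wording are hypothetical, and the code assumes the model answers with a candidate number.

```python
import re
from typing import Callable, List


def build_persona_prompt(expert: str, question: str, candidates: List[str]) -> str:
    """Ask the model to judge the candidates while adopting an expert persona."""
    numbered = "\n".join(f"{i}. {c}" for i, c in enumerate(candidates, start=1))
    return (
        f"You are {expert}. From that expert's perspective, read the question and the "
        "candidate answers below, then reply with the number of the best answer only.\n\n"
        f"Question: {question}\n\nCandidates:\n{numbered}"
    )


def pick_best(
    ask: Callable[[str], str], expert: str, question: str, candidates: List[str]
) -> str:
    """Return the candidate the persona-prompted model selects."""
    reply = ask(build_persona_prompt(expert, question, candidates))
    match = re.search(r"\d+", reply)  # take the first number in the reply
    if match is None:
        raise ValueError(f"no candidate number in reply: {reply!r}")
    index = int(match.group()) - 1  # candidates are numbered from 1
    if not 0 <= index < len(candidates):
        raise ValueError(f"candidate number out of range: {reply!r}")
    return candidates[index]


if __name__ == "__main__":
    candidates = ["It is made of marshmallows.", "It is made of wrought iron."]
    fake_llm = lambda prompt: "2"  # stand-in for a real model reply
    print(pick_best(fake_llm, "a structural engineer", "What is the Eiffel Tower made of?", candidates))
```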

By following these protocols, organizations can aim to improve the accuracy of their LLMs (and particularly LLM-RAGs) to 95%, greatly reducing the frequency and impact of AI hallucinations.

Still not sure how to make sense of it all? That’s where ECS comes in. Our data and AI experts can help you derive actionable insights from vast amounts of data, develop AI, and manage the unmanageable.