By Anthony Zech

Senior AI Architect

Over the last three decades of my personal IT journey, I have experienced the full continuum of good and bad ideas, practices, processes, technology and governance efforts. The last few years, however, have brought a technology renaissance of sorts, driven by the cumulative effect of adjacent technologies, methodologies and processes being adapted to deliver more effective, more efficient and higher-value outcomes for our customers and ourselves.

The most significant of these include:

Technology

Hyperconverged hardware and virtualization of hosts, networks, storage and security, enabling a lower-cost, utility-style infrastructure in which whole systems can be defined in software.

Methodology

Scalable management methodologies that fuse rapid development through agile and lean practices, such as the Scaled Agile Framework for enterprises (SAFe), with continuous improvement and collaboration practices that help large, often distributed teams solve complex challenges.

Process

Widespread automation of processes and procedures to standardize and streamline the buildout of infrastructure, allow rapid, consistent testing of applications and security and, most importantly, increase the resilience of complex systems by limiting the effects of human error.

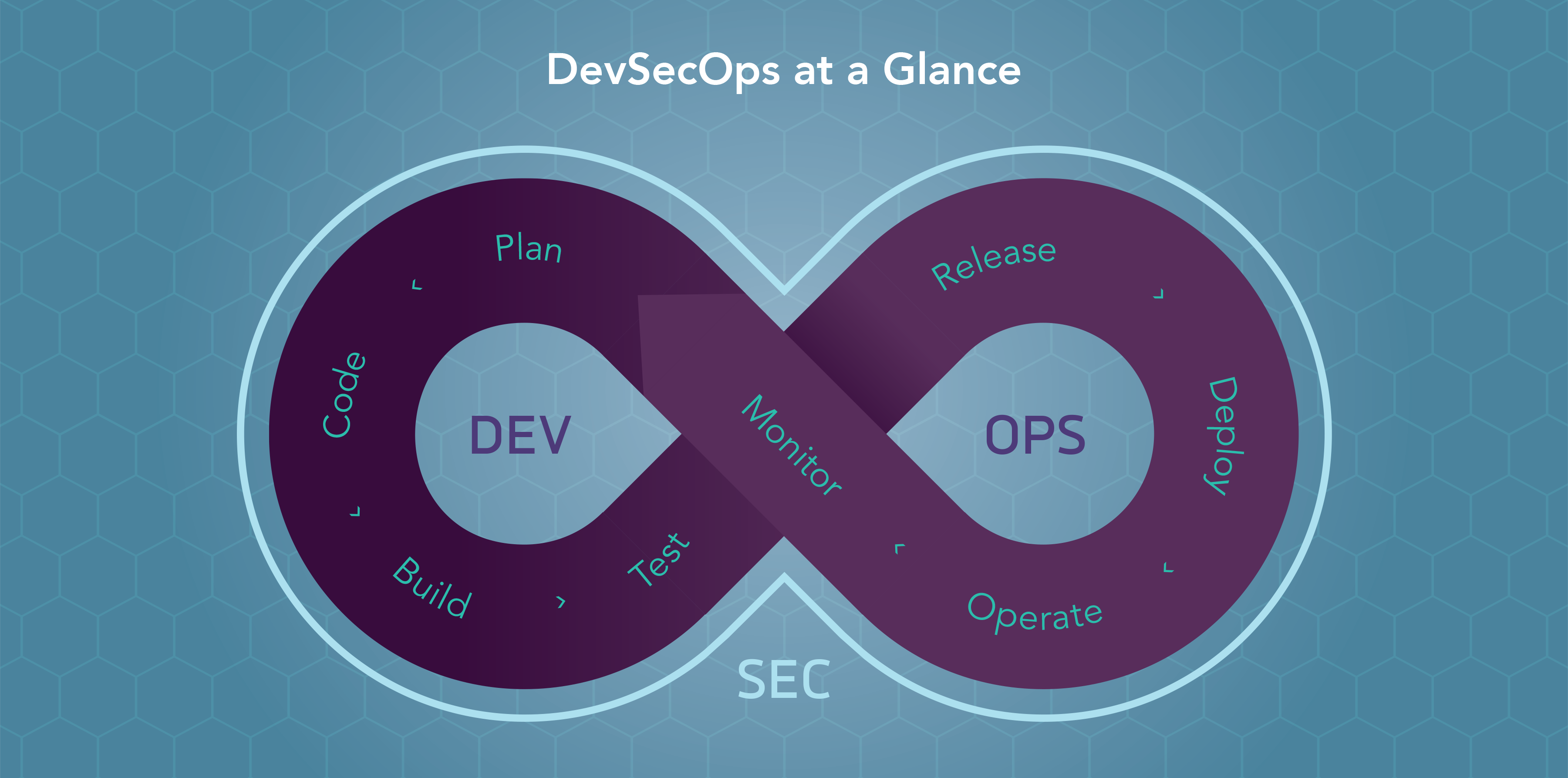

Together, these capabilities form the underpinning of DevSecOps. As the operational research suggests (DORA, State of DevOps 2019, https://cloud.google.com/devops/), this way forward enables successful planning and execution of large-scale system modernization and transformation efforts, and it provides clear mechanisms to keep the focus on the mission or business and deliver value to all stakeholders.

Yet why is there so much resistance to applying these processes in government systems? It comes down to scale, complexity, understanding, awareness and trust. Most government systems are very large and complex, having evolved over multiple generations. There are usually few people still available who understand the original design tradeoffs and the uniqueness of each system’s evolution. In many cases, the current systems carry a long history of feature and integration delays, challenges and occasional failures. They provide high-visibility, mission-critical services, and there is a strong sense of “if it’s not broke, don’t fix it.” Typically, though, these systems are too costly for the status quo to be maintained. This is where systems engineering and architecture come back into play to effectively harness the power of DevSecOps.

Architecture allows a common set of conversations to occur between IT solution providers, the business owners and the finance teams. Architects now have the primary responsibility to lay down the general rules under which IT may operate, and to determine when, and if, exceptions to those rules are acceptable to the organization. The goal is a more efficient and affordable path to modernization and transformation for the next generation of systems.

Engineering at the speed of Agile/DevSecOps now has a greater responsibility to enforce best practices such as secure software development, design for resiliency and continuous improvement in operations through practices like ITIL 4 and site reliability engineering (SRE). In many large government systems, engineering must also harmonize the software supply chain and the hardware supply chain inherent in cyber-physical systems. At every step in the process, engineering must make sure that both hardware and software stay aligned, patched, tested and compliant with all governing laws, policies and mandates. NIST SP 800-160, Volumes 1 and 2 and the forthcoming Volume 3, provide ample guidance and support for systems engineering best practices. The guidance and best practices are readily available, but there is an art to the application of the science. As Andrew Jassy, CEO of Amazon Web Services, has been saying for some time now, “there is no compression algorithm for experience.”